The drive towards the Metric system continues, though now by way of erosion, rather than replacement. Here are some reasons to resist it.

In the last century, the world leapt to adopt many radically new things: airplanes, automobiles, computers, phones, and television, to name just a few. When introduced, these things upset ancient patterns of life and came with the usual array of unintended consequences, but we jumped for them anyway, because they gave us something we really wanted, for better or for worse. This is a familiar phenomenon throughout human history. Oh, sometimes we’re a little slow on the uptake, but almost by definition, we don’t need to be forced into accepting things that work. That brings us to the subject of this essay. What I’ll be addressing here is a tool that has long been touted as a vast improvement over its predecessors, as simple and easy and logical, yet which has never been accepted, anywhere, except by force of law. That tool is the Metric system.

I know, I know, we’ve all been told since infancy about the supposed advantages of this system, and about the supposed barbaric inefficiencies of conventional measure, but stay with me a while here. Most of the supposed advantages of Metrics are shared by conventional measure. Most of the so-called inefficiencies and illogic of conventional measure are either obscure aspects that almost no one uses, or actual advantages that have gone unrecognized in the rush to Metrication. In short, Metric measuring isn’t nearly as good as it’s cracked up to be, and conventional measure is a lot better than typically assessed. This holds true throughout both systems, so it’s tough to decide where to start. I’ll commence with one of my favorites: temperature.

Daniel Gabriel Fahrenheit was a German-born scientist and the inventor of the first practical thermometer. His first big technological breakthrough was discovering the effect of barometric pressure on the boiling point of water. In order to have a meaningful standard temperature for this point, he came up with a standard barometric pressure. His other big breakthrough was establishing the freezing point of water. Here he discovered that a substance can exist in three states simultaneously, the so-called “triple point”: it can be liquid, solid, and gaseous all at once, in equilibrium. Nailing this point down had been beyond previous researchers, who had been trying in vain for an absolute point.

With these two reference points established for water, Fahrenheit set about the practical matter of actually constructing a thermometer. This involved a lot of experimentation, in both materials and design, but he eventually settled on the same mercury-in-a-glass-tube combination that we use today. That left the matter of calibration, and the scale he chose has become one of the favorite whipping boys of metrication advocates. Instead of following the “logical” route and calling the freezing point of water zero and the boiling point 100, he assigned these points the weird values of 32 and 212 degrees. Illogical, right?

Maybe not. Consider, first, that this man was smart enough to work out the principles of this instrument in the first place; it is vanishingly unlikely that he would skip past this detail without excellent reason. And he had excellent reason. He realized that, though water made for handy reference points, its behavior had little to do with how humans perceive temperature. In the northern hemisphere, where most of the world’s population lives and has always lived, we experience a range of temperatures that runs well below the freezing point of water at one end and (fortunately) stays well below the boiling point of water at the other. Calibrating a thermometer relative to water makes about as much sense as calibrating a clock to the day on Mars: it has no connection to us. So Fahrenheit measured what people actually experience, placing average extreme lows at 0 and average extreme highs at 100, then left the scale open at either end. To this day, this range is useful for humans everywhere, on an intuitive level. We know that anything over 100 degrees is getting dangerously hot, and that anything under 0 is getting dangerously cold. We can relate to this scale.

It is also significant that Fahrenheit used a 0-to-100 scale at all. Remember, this was long before the Metric system, but people had been accustomed since antiquity to using a “100” scale. Think centurions, for instance, or century, or percent. Hundreds are often the handiest way to measure and bracket significant ranges, and people make use of this when it makes sense. Hundreds can easily be scaled to higher or lower levels, so we keep our money in decimal values and take very fine measurements in thousandths or parts per million. Fahrenheit recognized the value of hundreds; he just put them to work relative to human perceptions, rather than forcing humans to translate their perceptions relative to the characteristics of water. Unlike Mr. Celsius, who apparently missed all this, Mr. Fahrenheit made a point of relating the fruits of his labors to human beings, and he did so using a decimal range for typical living conditions. This range was fine-grained enough to mark perceptible changes in temperature, but coarse-grained enough that it wasn’t unwieldy. Mr. Celsius locked himself into a range that had nothing to do with people, and one consequence is that his scale is much coarser than Fahrenheit’s: each Celsius degree spans 1.8 Fahrenheit degrees, since the 100 Celsius degrees between freezing and boiling cover the same interval as 180 Fahrenheit degrees (32 to 212). In practice, this means that if you take the easy road and jump in whole degrees when taking readings, you will miss significant changes. And if you don’t, you’ll be dealing, unnecessarily, with decimal points.
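The coarseness comparison follows from the standard conversion formula, F = C × 9/5 + 32, under which one Celsius degree equals 1.8 Fahrenheit degrees. A minimal Python sketch (the function name `c_to_f` is only illustrative) shows water’s fixed points and how whole-degree Celsius steps land on the Fahrenheit scale:

```python
def c_to_f(celsius: float) -> float:
    """Convert a Celsius reading to Fahrenheit using F = C * 9/5 + 32."""
    return celsius * 9 / 5 + 32

# Water's fixed points: freezing and boiling at standard pressure.
print(c_to_f(0), c_to_f(100))  # 32.0 212.0

# One whole Celsius degree spans 1.8 Fahrenheit degrees.
print(round(c_to_f(21) - c_to_f(20), 10))  # 1.8

# Whole-degree Celsius steps across a mild indoor range, expressed in °F:
# consecutive readings are about 1.8 °F apart.
for c in range(18, 23):
    print(f"{c} °C = {c_to_f(c):.1f} °F")
```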

Some Celsius asides here: did you know that the original scale had 0 as the boiling point and 100 as the freezing point? That the French National Assembly adopted it into the Metric system on April Fool’s Day? And did you realize that the Celsius scale is no more intrinsically “metric” than Mr. Fahrenheit’s? Either one could have been used as calibration for 1 cubic centimeter of water.

Now the question must surely arise: why not count everything by hundreds? Why does so much of conventional measure veer off into dozens and sixteenths and scores? In answer, let me tell you about some French carpenters.

I was in Montreal years ago, having dinner with a couple of carpenters who were visiting from near Paris. They were describing a brilliant technique whereby they had vastly simplified layout for their jobs. “Instead of using 1 meter as a standard,” one of them said, “we use 1.2 meters. That way we hardly ever have to deal with decimal points, as 12 can be divided by 2, 3, 4, 6, and itself.”

And I said, “You mean, like the foot?”

And I swear, they both slapped their foreheads and made various French exclamations of astonishment. The most significant thing for me was that they knew that the foot has twelve inches in it. They had just never considered that there might be a logical reason for it. But 1.2 meters, well, that made sense.
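The arithmetic behind the carpenters’ trick is easy to check: a base of 12 (or 120 centimeters) splits evenly into halves, thirds, quarters, and sixths, while a base of 10 splits evenly only into halves and fifths. A small Python sketch, where `divisors` is an illustrative helper rather than anything from the source:

```python
def divisors(n: int) -> list[int]:
    """Return every whole number that divides n with no remainder."""
    return [d for d in range(1, n + 1) if n % d == 0]

print(divisors(12))  # [1, 2, 3, 4, 6, 12]
print(divisors(10))  # [1, 2, 5, 10]

# A 1.2 m module is 120 cm: thirds and quarters come out as whole centimeters.
print(120 / 3, 120 / 4)  # 40.0 30.0

# A 1 m module (100 cm) leaves a repeating decimal when divided into thirds.
print(100 / 3)
```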

We had a very productive discussion that night, getting into the logic behind radical notions like sixteenths and eighths, which allow for useful increments of change instead of the orders-of-magnitude leaps that the Metric system locks one into. And I told them about an old framing square I had, which was marked primarily in inches and fractions but had a little hundredths scale in one corner. The idea is that one can calculate the constants for things like rafter runs very precisely, in hundredths of an inch, then, once the run has been multiplied out to full length, use a pair of calipers to find the nearest sixteenth or eighth. They got it right away and saw how the square makes use of decimals where they are useful, for fine-grained tweeziness, but lets the operator escape into fractions that are easier to see and work with. By the way, the 1.2 meters trick might be common in France, for all I know, as I have heard of it from other people. If so, it’s a clear case of common sense finding a way to deal with what is only ostensible logic.
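The framing-square workflow (carry the constant in hundredths, round to a usable fraction only at the end) can be sketched in Python with the standard library’s `fractions.Fraction`. The function name `nearest_fraction` and the example figures are hypothetical: they assume an 8-in-12 pitch, whose common-rafter length per foot of run is √(12² + 8²) ≈ 14.42 inches, and an assumed run of 11.5 feet.

```python
from fractions import Fraction

def nearest_fraction(inches: float, denominator: int = 16) -> str:
    """Round a decimal-inch length to the nearest 1/denominator inch and
    format it as whole inches plus a reduced fraction, as read off a tape."""
    # Number of 1/denominator steps; Python's round() sends exact ties to the even step.
    steps = round(inches * denominator)
    whole, rem = divmod(steps, denominator)
    if rem == 0:
        return f'{whole}"'
    frac = Fraction(rem, denominator)  # Fraction reduces automatically, e.g. 8/16 -> 1/2
    return f'{whole} {frac}"' if whole else f'{frac}"'

# Hypothetical example: constant of 14.42" per foot of run, 11.5 ft run.
length = 14.42 * 11.5                # about 165.83 inches, carried in decimals
print(nearest_fraction(length))     # 165 13/16"
print(nearest_fraction(length, 8))  # 165 7/8"
```

The decimal value does the precise multiplication; the fraction is only produced at the last step, which mirrors how the square’s hundredths scale hands off to ordinary sixteenths and eighths.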

This faux logic runs right through the Metric system. We are at first blinded by its seeming simplicity and rationality, but close examination reveals that it has no advantages, with two exceptions: it provides a common language for scientific exchange, much as Latin used to; and it provides a convenient homogenization for multinational companies. Neither of these reasons is enough to displace a system that already works at least as well. Americans have built airplanes, oil rigs, skyscrapers, and lots of other complex items, and you never hear about rooms full of people slaving away over “cumbersome fractions” to get the job done. For simpler but no less vital projects, like housebuilding, we have the classic 16” centers relating easily to 8’ plywood (96 inches divides into exactly six 16-inch spaces), and plumbing flow rates that manage quite nicely with gallons instead of liters. Why mess with what works? In our rigging shop, we often have to deal with assorted forms of measurement, but we have pocket calculators that convert between them at the touch of a button. We get along just fine with Metrics; we just have no need to adopt them exclusively.

On a larger scale, there have been some spectacular disasters caused by mixing conventional and metric units, like the Mars Climate Orbiter crash. Predictably, many people blamed conventional measure, even though Metrics are the latecomers to aerospace. For perspective, there have been many, many other engineering disasters, in both systems, due to misplaced decimal points. The Mars crash was about sloppy engineering.

So much Metric propaganda has to do with how cumbersome and confusing conventional measure is supposed to be, but this is misleading at best; Metrics gain simplicity only at the expense of becoming simplistic, so that the user is stuck with inflexible parameters, and no way to change things. And what’s at least as bad, those same parameters are touted, incessantly, as being all one needs, so if we get confused, we think it must be our fault.

Contrast this with the wonderful array of measuring tools in conventional measure. Sure, some of them are so much historical baggage, but by and large people devised these tools and persevered with them because they were useful. Decimals form an important part of conventional measure, but they lack the power, versatility, and ability to “shift gears” that other portioning methods have.

Much has been made of the inevitable triumph of the Metric system, and of how the U.S. is nearly alone in avoiding it. But people all over the world are backsliding, especially in English-speaking countries, and notably also in Japan, where traditional measure is resurfacing, and in China, where people have come up with metric equivalents of cups, pints, and quarts (for more on this, and many other topics, read *About the Size of It*, by Warwick Cairns: https://www.amazon.com/About-Size-Warwick-Cairns/dp/0330450301).

The reason for all this is that Metrics are pidgin measurement – very simple, but therefore very limited in range of expression. Schoolteachers like millimeters because they are easy to teach; fortunately that logic has yet to pervade, for the most part, things like history, geography, and mathematics. Or language: think how much simpler and streamlined things would be if we only had to learn pidgin English, with a hyper-limited vocabulary and hyper-simple syntax. No more cumbersome participles and infinitives, no more illogical dependent clauses or outmoded parenthetical expressions. We would be free at last of the burdens that make English such a powerful, versatile, adaptive language. Judging from the way many people speak, this notion has significant appeal, but the disadvantages of such an approach are ludicrously obvious. Our mistake has been in assuming that measurement can be similarly “simplified” without being similarly disabled.

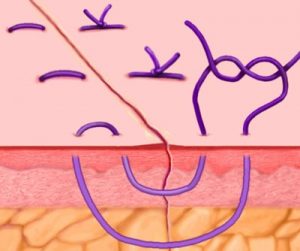

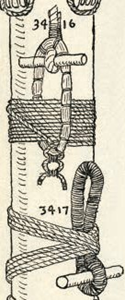

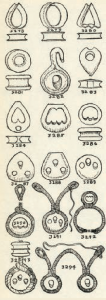

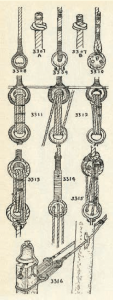

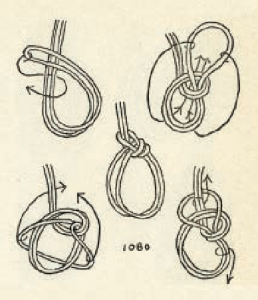

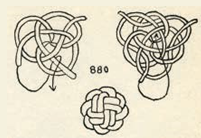

Two other examples of database adaptations are the knife lanyard knot (#787), and an original button (#880). Both of these knots were originally meant to be primarily decorative, and they are indeed lovely things. But a few years ago, riggers began making “soft shackles” in Spectra/Dyneema. These fabulously strong button-and-becket constructions have displaced steel shackles in many applications. A soft shackle that terminates in a lanyard knot, properly tied, can be counted on to hold at over 100% of the strength of the cordage it is tied in. And the version that terminates in a two-strand version of the #880 will hold at close to 200%.

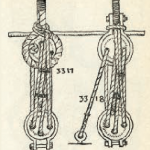

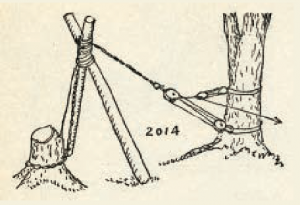

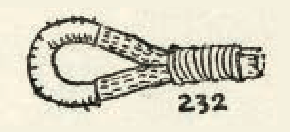

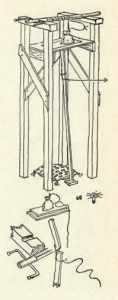

made around a block with 3-strand rope. Contemporary riggers simply adapted the structure to 12-strand braided rope, and gave it the option of an adjustable eye.